Can AI Accelerate Scientific Research?

by Corin Wagen · Apr 2, 2025

Update: This paper has been retracted. We are leaving this blog post up for historical reasons, but the data presented here should not be viewed as trustworthy or authoritative. Read the full statement from MIT: https://economics.mit.edu/news/assuring-accurate-research-record.

Despite the current ubiquity of artificial intelligence, many scientists remain skeptical. Generative AI models have made waves in image and language domains, but their relevance to real-world research often seems unclear. Can these models really help with something as complex and domain-specific as chemical discovery?

A new study by Aidan Toner-Rodgers at MIT provides a rigorous, large-scale evaluation of the impact of machine learning on scientific research. Toner-Rodgers evaluates what happened when a large U.S. industrial R&D lab introduced an AI-powered materials discovery tool to over a thousand researchers. The results are surprisingly clear: AI can dramatically accelerate innovation, but only for scientists with the domain expertise to guide it.

Aidan Toner-Rodgers, an MIT economics Ph.D. student.

The Effect of AI on Materials R&D

The AI tool studied in Toner-Rodgers's work was a graph neural network (GNN)-based diffusion model trained to generate candidate materials predicted to have specific properties. In this study, the researchers used it for inverse design: they supplied target features and received plausible structures in return. The company rolled the model out in waves across 1,018 scientists over almost two years, which allowed a controlled, large-scale study of AI's impact. (The identities of the company and the model are, sadly, confidential.)

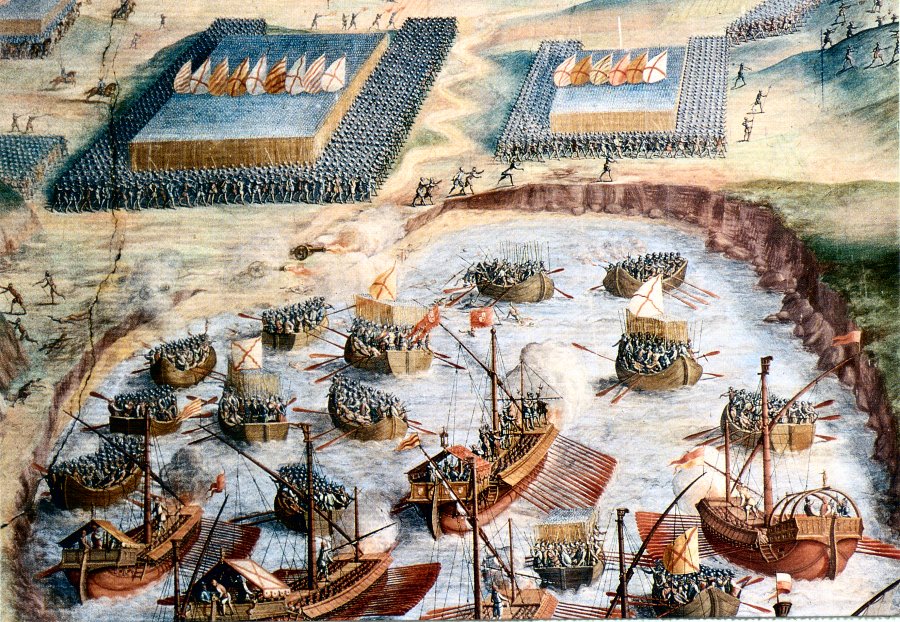

The results are striking. Researchers who gained access to the model discovered 44% more materials, filed 39% more patents, and produced 17% more product prototypes. Adoption of the AI model led to a clear step change in materials discovery and patent filings after about six months, while the increase in product prototypes took over a year to appear. This lag makes sense, as prototypes are downstream of patents and new materials.

Figure 5 from Toner-Rodgers's paper, showing the impact of introducing the AI model over time.

These graphs don't just show a flood of "AI slop" overrunning the materials discovery pipeline—as far as Toner-Rodgers can quantify, the discoveries were also better than human-only discoveries. The materials were superior in quality (as assessed by similarity to the researchers' desired properties) and showed significantly greater novelty, both structurally and in downstream patents. For instance, patents filed by AI-assisted scientists used more novel technical terminology, an early marker of transformative innovation.

One concern with applying ML to scientific domains is the so-called "streetlight effect": the idea that models might just guide us toward what we already know and disfavor truly novel research. But in this case, AI-enabled teams produced more distinct materials and more new product lines, not just incremental tweaks. This suggests that the model actually helped researchers explore new territory in materials design space, although a full treatment of this question will require further research.

Although the full effect of incorporating AI took a long time to materialize, the effect on the organization was substantial. Overall, Toner-Rodgers estimates that introducing this single AI model improved overall R&D efficiency by 13–15%, even after accounting for model training costs. A productivity boost of this size would be extraordinary in any company, let alone an organization with over a thousand researchers.

AI Shifts Scientific Bottlenecks

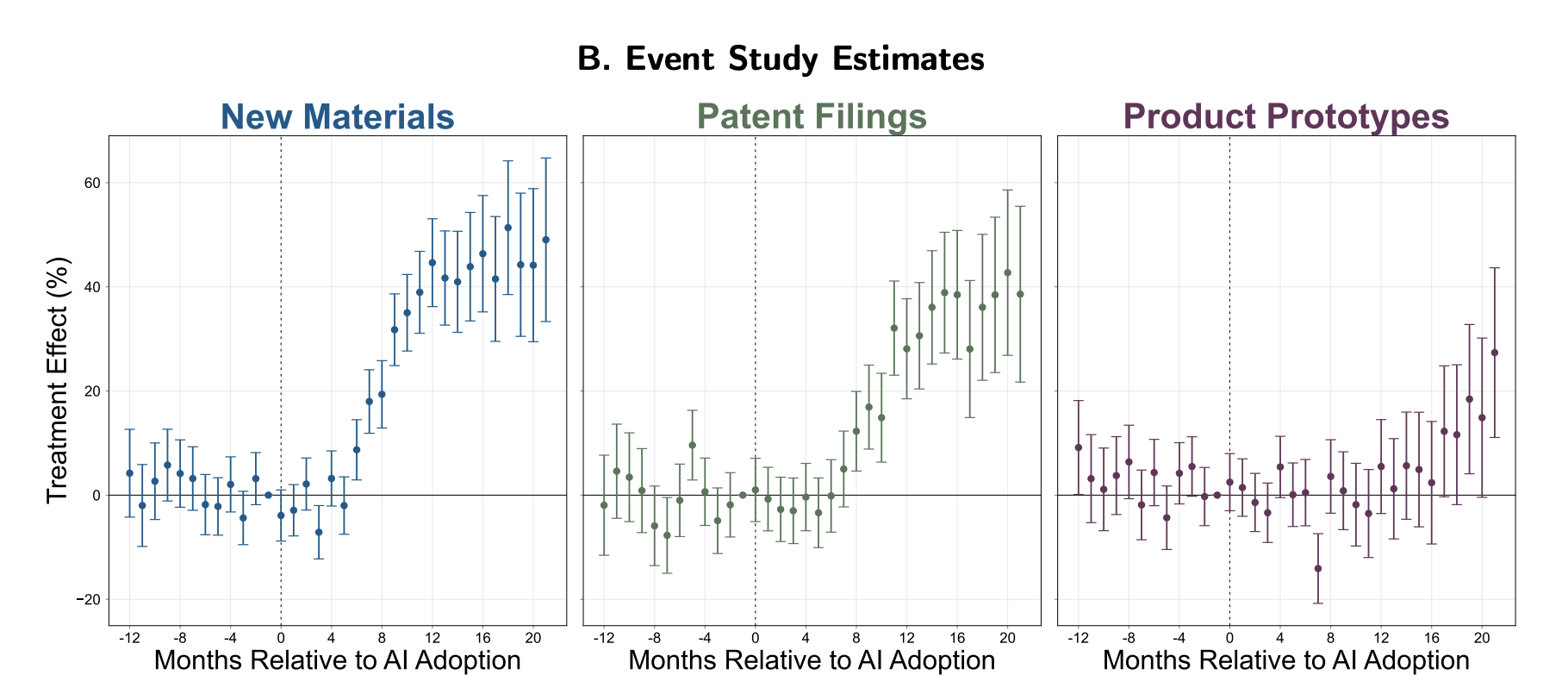

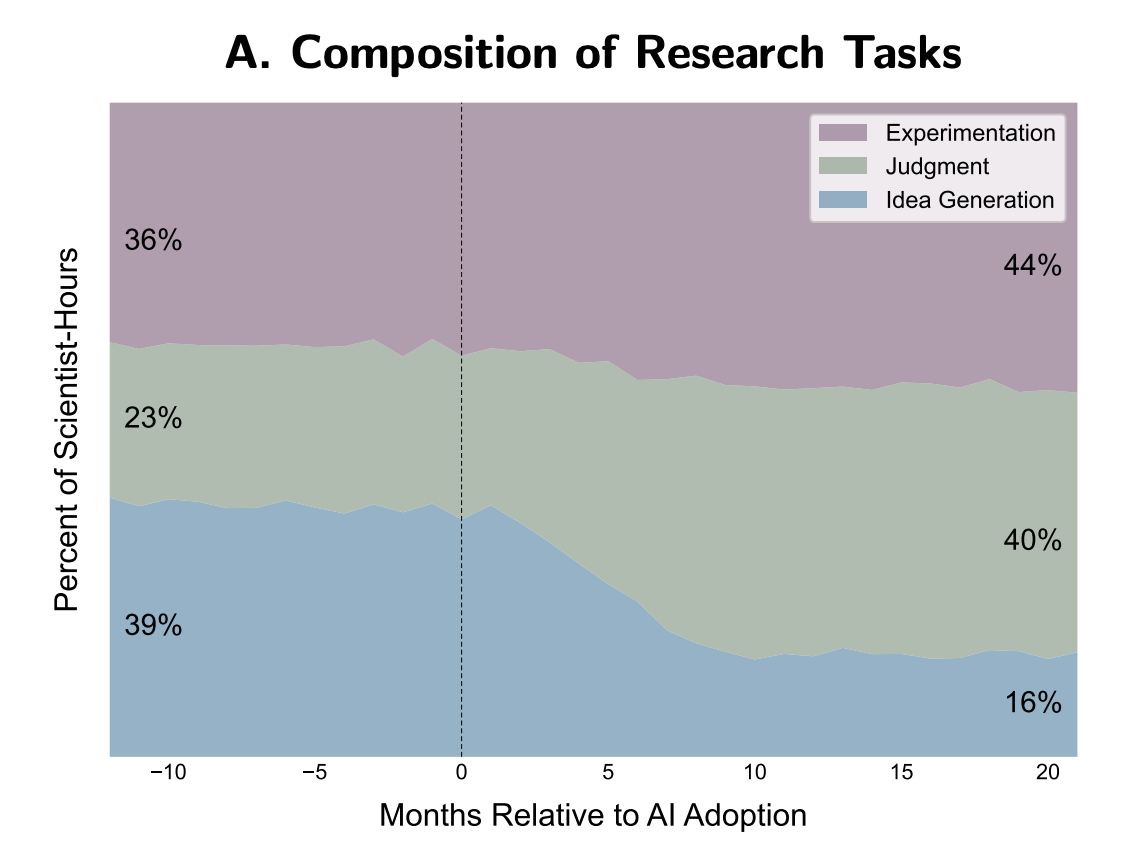

Scientists' task logs also showed a dramatic reallocation of effort: AI automated about 57% of the idea-generation process, freeing researchers to focus on evaluating and testing candidate materials—areas where domain knowledge is essential.

Figure 8 from Toner-Rodgers's paper, showing the impact of introducing the AI model on researcher activities.

Here's how Toner-Rodgers summarizes this finding:

While [AI] replaces labor in the specific activity of designing compounds, it augments labor in the broader discovery process due to its complementarity with evaluation tasks.

This phenomenon is perhaps unsurprising: the ML model studied here could generate new candidate materials, so researchers spent less time generating candidates and more time evaluating them. It's interesting to imagine what might happen if a second ML model capable of evaluating candidates were added. Might the productivity gains compound?

Human Expertise Still Matters

Critically, the introduction of the ML model didn't help all scientists equally. The top third of scientists nearly doubled their output, while the bottom third saw little change. This gap can be traced to differences in how these scientists used the model. The best performers used their domain expertise to filter the flood of model-suggested candidates, avoiding time sinks on unstable or irrelevant compounds. In contrast, less experienced users often tested the model's suggestions at random, burning resources on dead ends.

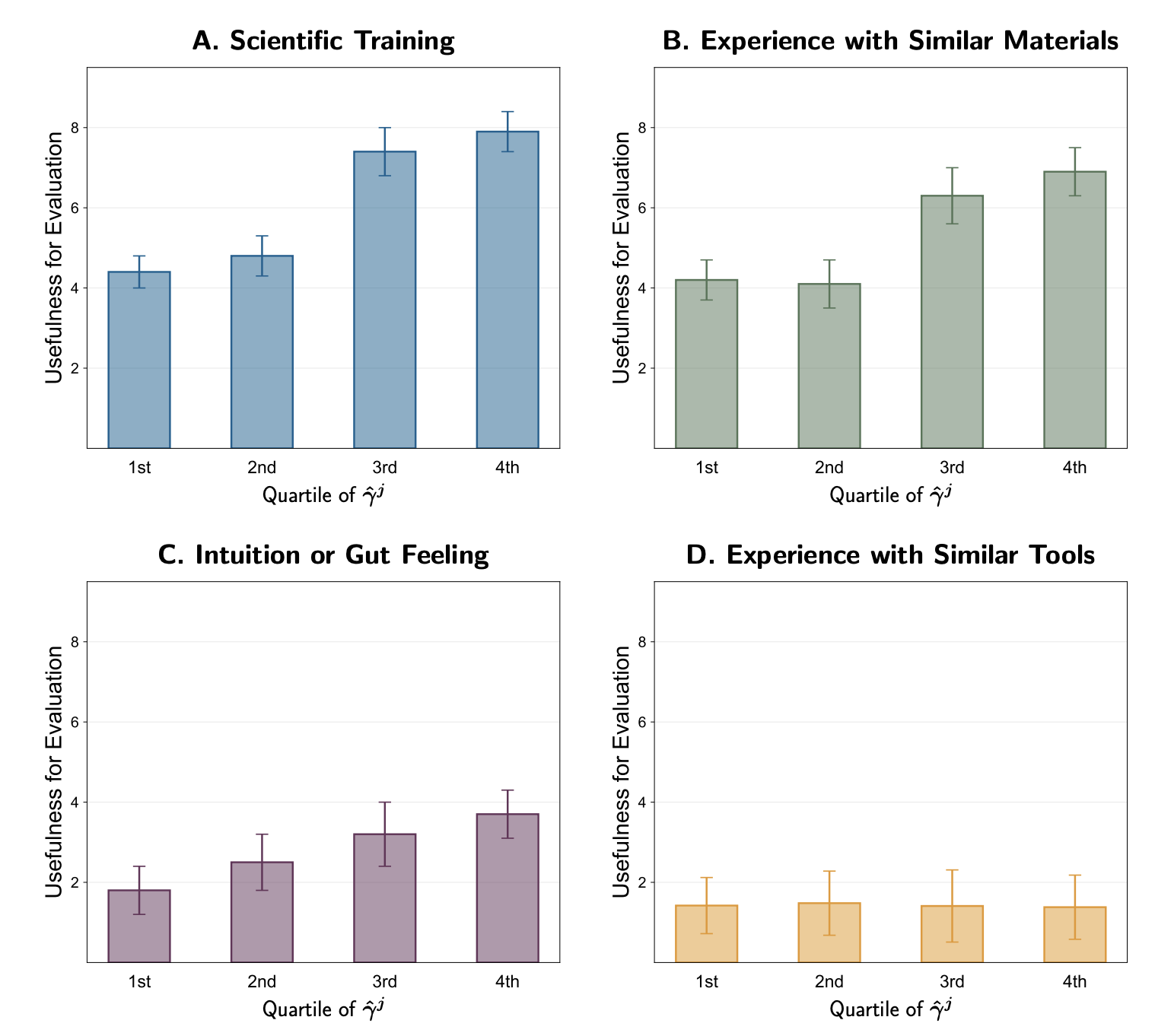

This divide can be further studied by examining which forms of expertise proved most useful for judging candidate materials generated by AI. Scientific training proved to be the most important, followed by previous in-field experience and raw intuition, while experience with other ML tools proved unimportant. Paradoxically, this implies that the advent of AI tools makes domain knowledge and scientific intuition more important, not less.

Figure 12 from Toner-Rodgers's paper, showing which forms of expertise proved useful in working with the AI model.

This finding complements earlier work suggesting that while machine prediction is improving rapidly, human evaluation and decision-making are still critical to success. One of the study's most striking findings is that "only scientists with sufficient expertise can harness the power of AI." The need for scientific thinking hasn't disappeared; it's simply shifted downstream to judgment and interpretation.

What About Real-World Impact?

Of course, increased patents and prototypes don't automatically mean real-world success. Still, there are reasons to take these results seriously: patent filings require novelty, utility, and non-obviousness, and product prototypes represent a substantial corporate expenditure and a degree of human validation and trust. We won't know the full impact of these discoveries for years, but for those trying to assess whether AI can unlock new chemical space, this study represents unusually rigorous evidence that it can.

Unfortunately, not all impacts were positive. In follow-up surveys, 82% of scientists reported reduced job satisfaction. Even those who benefited the most cited skill underutilization and a decline in creativity as top concerns. AI may accelerate discovery, but many researchers felt alienated by the new workflow. Still, scientists' belief in AI's productivity-enhancing potential nearly doubled after using the model. The vast majority reported plans to reskill, anticipating a future in which the traits needed to excel in scientific research will shift.

Conclusions

This study offers some of the clearest empirical evidence to date that AI can accelerate real-world scientific discovery, especially in chemistry and materials science. But it also highlights an important nuance. AI doesn't replace scientific experts; instead, it makes them considerably more valuable.

At Rowan, we're building the ML-native design and simulation platform for chemistry, drug discovery, and materials science. We believe that the future of scientific discovery requires both humans and AI models working in tandem, as described in Toner-Rodgers's paper, and are working to build software to make this transition possible. If you're a scientist looking to excel in the age of AI-powered research, come check out what we're building!