The Invisible Work of Computer-Assisted Drug Design

by Corin Wagen · Aug 28, 2025

The Stone Breakers, Gustave Courbet (1849)

Scientists who work in computer-assisted drug design (CADD) need a wide range of skills. In modern drug-design organizations, CADD scientists carry a large and ever-growing list of responsibilities:

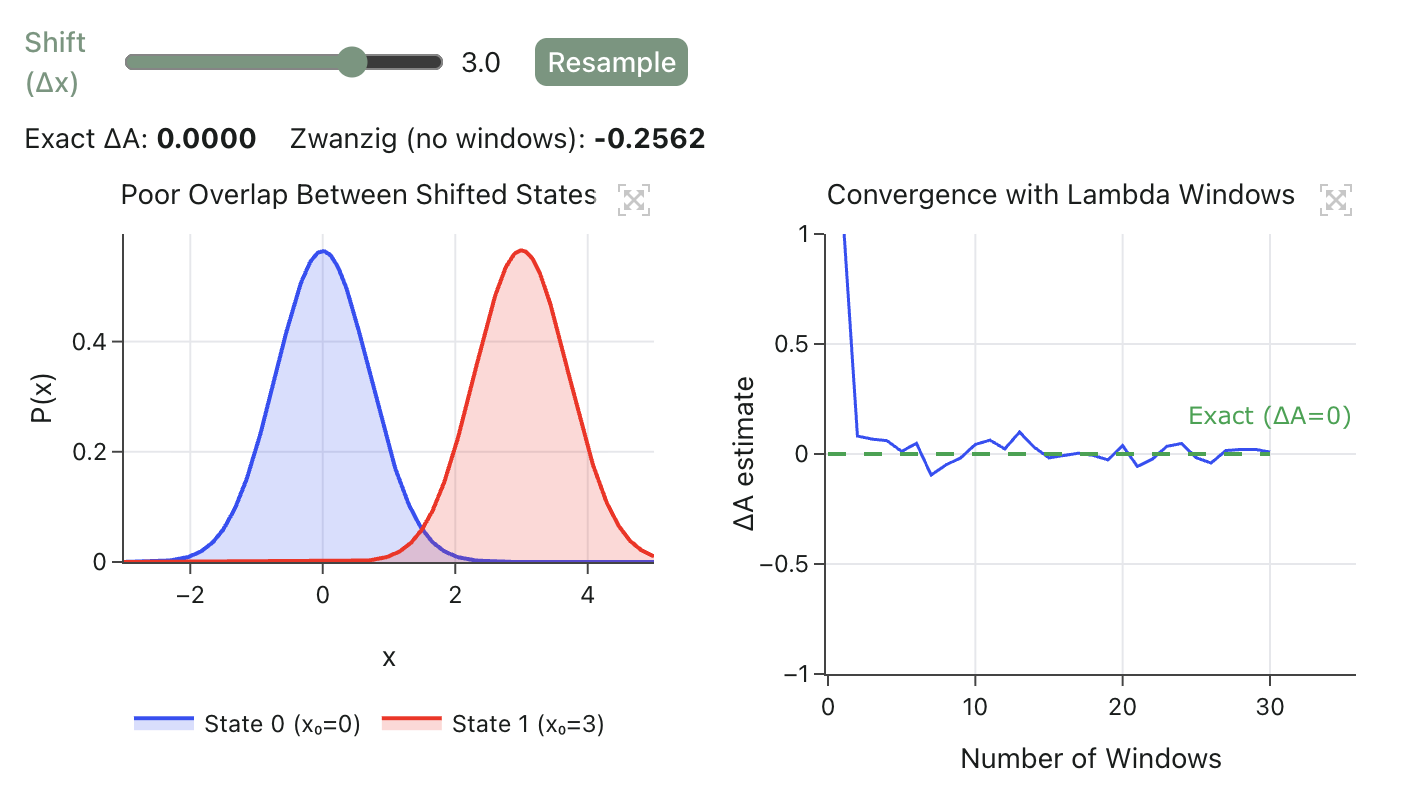

- They are experts in using a variety of molecular modeling software, including docking, molecular dynamics, free-energy perturbation, and quantum chemistry.

- They must interface with medicinal chemists and understand which compounds are practical and efficient to synthesize.

- They frequently work to organize and visualize data, either through plots or by building data-driven QSAR/QSPR models.

- They often work closely with structural biologists to build more accurate structural models.

- Increasingly, they are also asked to be comfortable with training, benchmarking, and deploying machine-learning models.

The diversity of software tools required for state-of-the-art computational drug design means that scientists often spend a surprising fraction of their time away from actual drug design. Jim Snyder, a "world-class modeler and scientist" with "high scientific success in academia and industry" (per Ash Jogalekar), wrote a fascinating overview of the state of computer-assisted drug design in the 1980s. Here's what he wrote about this particular topic (emphasis added):

> On the invisible side of the ledger—about 30-50% of the group's time—is the effort that permits the CADD group to maintain state-of-the-art status. In the current late 1980s-early 1990s environment, major software packages often incorporating new methodology are generally purchased from commercial vendors. These are now generally second or third generation, sophisticated and expensive ($50,000–150,000). Still, no commercial house can anticipate all the needs of a given applications' environment. It remains necessary to treat problems specific to a given research project and to locally extend known methodology. This means that new capabilities delivered in advanced versions of commercial software need careful evaluation.

Although Snyder was writing about the 1980s, his observations are no less true today. Commercial software solutions must still be evaluated, benchmarked, and tested on internal data, which is a slow process. The problem is even worse for academic code, whose authors often have little experience with industry use cases or conventional software practices.

The rise of machine learning has made the work of benchmarking and internal validation even more important, particularly as public benchmarks become contaminated by data leakage and overfitting. A recent study benchmarking DiffDock by Ajay Jain, Ann Cleves, and Pat Walters discusses the time and effort that the CADD community collectively spends benchmarking new methods (emphasis added):

> Publication of studies such as the DiffDock report are not cost-free to the CADD field. Magical sounding claims generate interest and take time for groups to investigate and debunk. Many groups must independently test and understand the validity of such claims. This is because most groups, certainly those focused primarily on developing new drugs, do not have the time to publish extensive rebuttals such as this. Therefore their effort in validation/debunking is replicated many fold. The waste of time and effort is substantial, and the process of drug discovery is difficult enough without additional unnecessary challenges.
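
One inexpensive internal check before trusting a public benchmark is to measure how much of the test set also appears in a model's training data. The sketch below is only illustrative: it assumes each molecule has already been reduced to a canonical identifier string (for example, a canonical SMILES or InChIKey produced by a cheminformatics toolkit), and the function name `leaked_fraction` is hypothetical.

```python
def leaked_fraction(train_ids, test_ids):
    """Return (fraction, overlap) for test identifiers also found in training data.

    Assumption: identifiers are already canonicalized, so identical
    molecules map to identical strings.
    """
    train = set(train_ids)
    test = set(test_ids)
    if not test:
        raise ValueError("test set is empty")
    overlap = train & test
    return len(overlap) / len(test), sorted(overlap)


if __name__ == "__main__":
    # Toy identifiers for illustration only.
    train = ["CCO", "c1ccccc1", "CC(=O)O"]
    test = ["CCO", "CCN", "c1ccccc1", "CCCC"]
    fraction, overlap = leaked_fraction(train, test)
    print(f"{fraction:.0%} of test molecules also appear in training: {overlap}")
```

Exact-match overlap is only a lower bound on leakage, since close analogues and near-duplicates are not detected; a similarity-based comparison would be needed to catch those.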

Even when authors report high-quality benchmarks and clearly disclose when a method will and won't work, considerable work remains before a given method can be integrated into production CADD usage. Most scientific tasks require more than a single computation or model-inference step, necessitating integration into a larger software ecosystem. (I wrote about this in the context of ML-powered workflows previously.) Building this state-of-the-art software infrastructure can still be challenging, as Snyder describes (emphasis added):

> No single piece of software is ordinarily sufficient to address a routine but multistep modeling task. For example, conformation generation, optimization, and least-squares fitting can involve three separate computer programs. The XYZ coordinate output from the first is the input for the second; output from the latter is input for the third. With an evolving library of 40-50 active codes, the task of assuring comprehensive and smooth coordinate interconversion is a demanding and ongoing one.
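
The hand-off Snyder describes can be sketched in a few lines. The example below is a minimal illustration, not any particular program's interface: it assumes the common XYZ layout (atom count, comment line, then one `element x y z` line per atom), and the names `parse_xyz`, `format_xyz`, `center`, and `run_pipeline` are hypothetical. The single stage shown, which translates the centroid to the origin, stands in for real steps such as optimization or least-squares fitting.

```python
def parse_xyz(text):
    """Parse XYZ text into (comment, [(element, x, y, z), ...])."""
    lines = text.strip().splitlines()
    n_atoms = int(lines[0])
    comment = lines[1] if len(lines) > 1 else ""
    atoms = []
    for line in lines[2:2 + n_atoms]:
        element, x, y, z = line.split()[:4]
        atoms.append((element, float(x), float(y), float(z)))
    if len(atoms) != n_atoms:
        raise ValueError(f"expected {n_atoms} atoms, found {len(atoms)}")
    return comment, atoms


def format_xyz(comment, atoms):
    """Serialize atoms back to XYZ text."""
    lines = [str(len(atoms)), comment]
    lines += [f"{el} {x:.6f} {y:.6f} {z:.6f}" for el, x, y, z in atoms]
    return "\n".join(lines) + "\n"


def center(xyz_text):
    """Example stage: move the centroid to the origin (XYZ in, XYZ out)."""
    comment, atoms = parse_xyz(xyz_text)
    if not atoms:
        raise ValueError("no atoms to center")
    n = len(atoms)
    cx = sum(a[1] for a in atoms) / n
    cy = sum(a[2] for a in atoms) / n
    cz = sum(a[3] for a in atoms) / n
    moved = [(el, x - cx, y - cy, z - cz) for el, x, y, z in atoms]
    return format_xyz(comment, moved)


def run_pipeline(xyz_text, stages):
    """Feed the XYZ output of each stage into the next one."""
    for stage in stages:
        xyz_text = stage(xyz_text)
    return xyz_text


if __name__ == "__main__":
    start = "2\ntoy molecule\nH 0.0 0.0 0.0\nH 2.0 0.0 0.0\n"
    print(run_pipeline(start, [center]))
```

Keeping every stage to the same "XYZ text in, XYZ text out" contract is one way to make stages interchangeable; in practice each stage would wrap a separate program, and the parsing step is where format mismatches between codes tend to surface.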

Most scientific software tools don't make integration easy. Modern packaging and code-deployment processes are rarely followed in science, forcing the CADD practitioner to go through the painful and time-consuming task of manually creating a minimal environment capable of running a given model or algorithm.

For methods requiring specialized hardware like GPUs, things become still more complex. Some modern methods, like protein–ligand co-folding, also require external resources like an MSA server that must be provisioned, creating additional opportunities for failure. Solving all these issues requires CADD scientists to essentially become "ML DevOps" experts, a skillset which most do not naturally have.
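
Some of this pain can be caught early with a preflight check that runs before any job is submitted. The sketch below uses only the Python standard library; the function name `preflight` and the example requirements are hypothetical. Finding `nvidia-smi` on the PATH is only a rough proxy for a usable GPU, and a real deployment would also need to confirm driver versions and that any MSA server is reachable.

```python
import importlib.util
import shutil


def preflight(executables=(), modules=()):
    """Return a list of problems; an empty list means every check passed.

    executables: command names expected on PATH.
    modules: top-level Python module names expected to be importable.
    """
    problems = []
    for exe in executables:
        if shutil.which(exe) is None:
            problems.append(f"executable not found on PATH: {exe}")
    for mod in modules:
        try:
            found = importlib.util.find_spec(mod) is not None
        except (ImportError, ValueError):
            found = False
        if not found:
            problems.append(f"Python module not importable: {mod}")
    return problems


if __name__ == "__main__":
    # Illustrative requirements only; substitute whatever a given method needs.
    for problem in preflight(executables=["nvidia-smi"], modules=["json"]):
        print("WARNING:", problem)
```

Returning a list of problems rather than raising on the first failure lets a user see everything that is missing in one pass.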

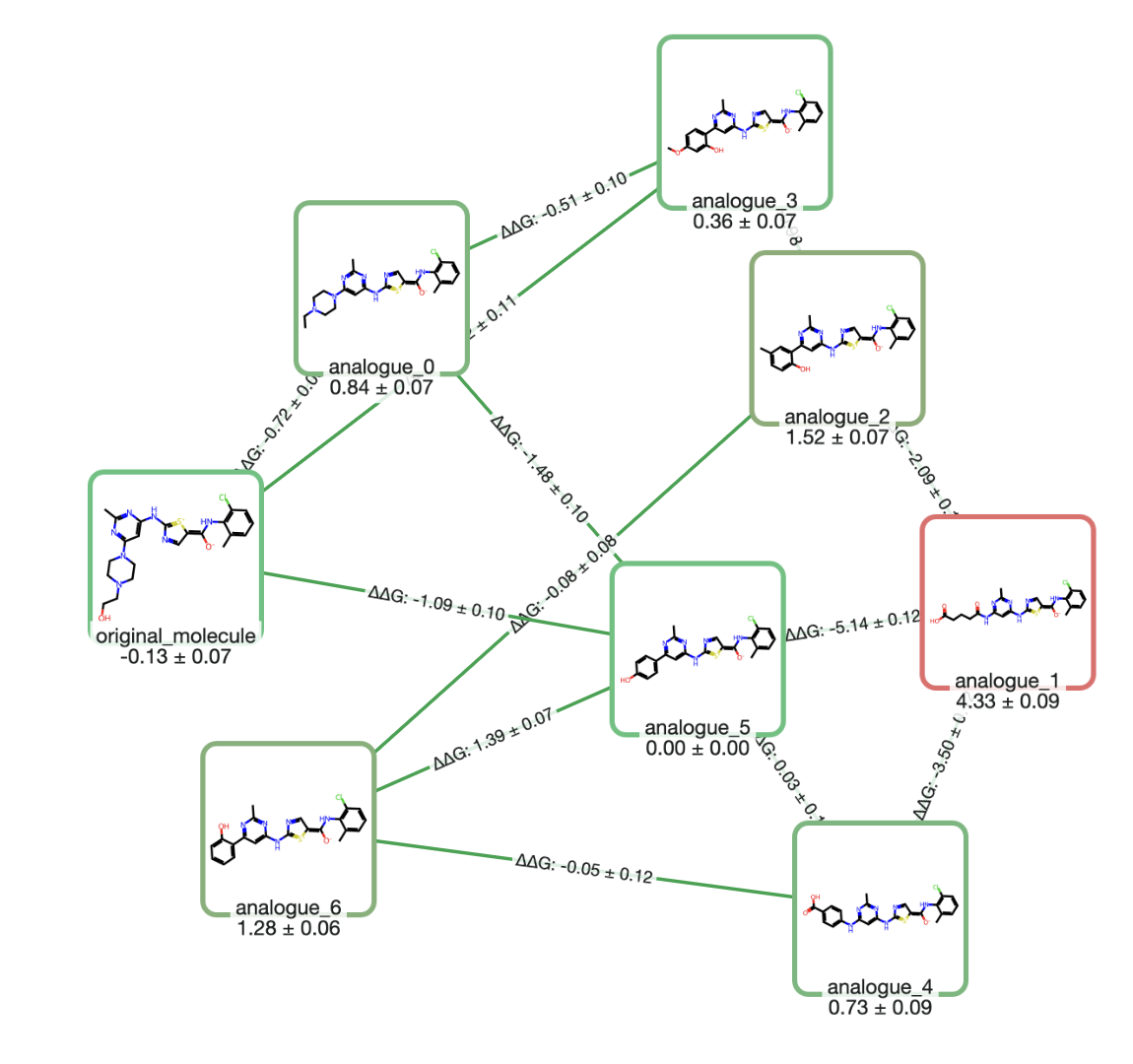

Building tools to run calculations is only half the problem. To be impactful, CADD scientists must also integrate their predictions into the experimental design–make–test–analyze cycle, which necessitates communicating results with medicinal chemists. Many large pharmaceutical companies have invested in building some sort of internal graphical platform to simplify communication and allow scientists across the organization to run and view calculations, but these platforms are often costly to maintain and accumulate technical debt quickly. (We've talked to a lot of teams that had a fantastic internal platform for running calculations until the maintainer switched roles and left the platform to die a slow and ignominious death.)

At Rowan, we're working to build a CADD platform that addresses all these issues. Our goal is to help scientists stop worrying about software issues and free them up to focus on their science, helping to cut down on the amount of invisible work that goes into CADD and letting our users do what they're good at. Here's what we do:

- Benchmarking. We develop and run benchmarks for machine-learning models and workflows, like this benchmark for strain-energy predictions or this benchmark for bond-dissociation-energy predictions. We also run and host benchmarks for neural network potentials at benchmarks.rowansci.com.

- Validation. We automatically apply sanity checks like PoseBusters to our results, highlighting cases in which computational predictions might not be accurate.

- Useful workflows. We build high-level workflows validated against experimental data, helping scientists to easily compare the performance of our methods to alternatives. See, for instance, our solutions for hydrogen-bond-donor/acceptor-strength prediction, macroscopic pKa prediction and blood–brain-barrier penetration, and bond-dissociation energy.

- Engineering. We automatically handle cloud deployment and engineering, making it simple for our users to scale from a single test calculation to tens of thousands of jobs.

- Integration. Rowan's Python API makes integration with other software packages easy; we return data as validated Python objects with useful methods. You can plug Rowan into any existing workflows with a few lines of code—and, since we don't charge for API access, anyone can try out these integrations without needing to be a Rowan customer.

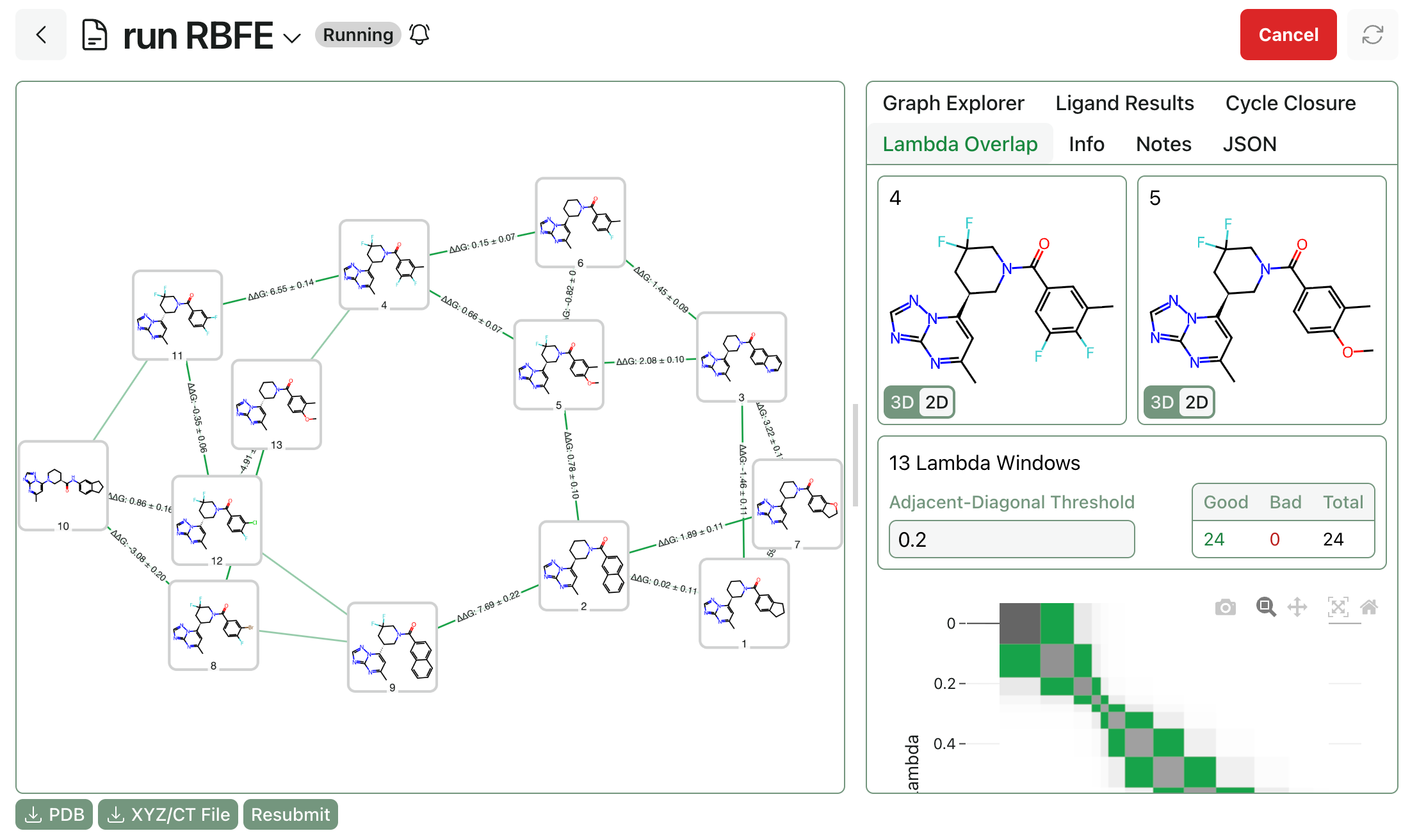

- Sharing results. Rowan's graphical user interface makes it easy to collaborate and share calculations with other scientists in your organization, or even for medicinal chemists to run calculations themselves.
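
To illustrate the kind of sanity check mentioned under Validation above, the sketch below flags atom pairs that sit implausibly close together. This is not the PoseBusters implementation or API, and it is not a description of Rowan's internals; the function name `find_clashes` and the 0.7 Å default threshold are assumptions chosen for illustration (the threshold sits just below the H–H bond length of about 0.74 Å). Real tools use element-aware distance criteria alongside many other tests.

```python
import math
from itertools import combinations


def find_clashes(coords, min_distance=0.7):
    """Return (i, j, distance) for each atom pair closer than min_distance (in Å).

    coords: sequence of (x, y, z) tuples in Å.
    """
    clashes = []
    for (i, a), (j, b) in combinations(enumerate(coords), 2):
        distance = math.dist(a, b)
        if distance < min_distance:
            clashes.append((i, j, distance))
    return clashes


if __name__ == "__main__":
    # Toy coordinates: atoms 0 and 1 overlap; atom 2 is well separated.
    coords = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (2.0, 0.0, 0.0)]
    for i, j, distance in find_clashes(coords):
        print(f"atoms {i} and {j} are only {distance:.2f} Å apart")
```

The pairwise loop scales quadratically with atom count, which is fine for small molecules; larger systems would call for a spatial grid or neighbor list.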

Building a top-tier CADD team used to mean spending millions on software licenses and developers to build a bespoke internal platform; with Rowan, we're building this platform for all our customers. If you'd like to be one of them, make an account or reach out to our team!